Managing multiple hardware versions of your device with Yocto and Mender

These days, managing a robust and coherent update deployment pipeline across multiple hardware variants is a big challenge for many device manufacturers and device fleet managers. From the outside, these variants can appear to use the same hardware, but on the inside the parts can differ. There are two key reasons for this. First, the time between a part becoming available and being deprecated keeps getting shorter, which sometimes forces numerous hardware iterations over a product's lifecycle. Second, depending on the production volume, replacing a high-cost part such as a system-on-module (SoM) with a more cost-efficient one can lead to substantial savings.


This article shows how to carefully set up and integrate an arbitrary number of board support packages (BSPs) into a Yocto build. It also highlights the benefits that Mender provides for rolling out the resulting artifacts.

Multiple hardware variants in the product life cycle

In order to address the challenges arising from multiple hardware variants, we must first understand what those variants actually are, and why they are introduced over the lifecycle of many products. A hardware variant refers to any revision or replacement of the board running Linux in your device which is not a completely transparent drop-in. This specifically means any kind of noticeable difference that needs to be addressed by the software running on the device.

Examples of such changes could be:

- architectural: changing the system-on-chip or system-on-module for availability

- improvement: changing the storage technology for increased robustness

- financial: replacing a piece of analog circuitry for cost optimization

Regardless of how great the impact of the variant is, when it comes to shipping software (as opposed to creating software), a change that only requires one bit to be flipped in the bootloader requires just as much effort as one that completely changes the instruction set and silicon platform.

If a change in the hardware doesn’t require handling in software, then it is not a hardware variant. Such changes can be big, such as:

- Changing power supply: deriving a 110V-variant from a 230V-powered device

- Physical change: putting a device into a rugged enclosure for harsh environments

- Changing for optimization: replacing a storage chip with a compatible derivative in a smaller or more beneficial packaging.

The storage chip appears in both lists. It is often not clear from the beginning whether a change actually needs to be handled as a hardware variant, because differences between otherwise "compatible" devices can remain, just in a very subtle form. Close coordination between the hardware and software integration teams, and a solid way of tracking and handling these variants in the field, are both absolutely crucial for managing software support over the product lifecycle.

Multiple hardware variants & software deployment

When dealing with hardware variants, the challenges of creating the corresponding software packages and deploying them are often overlooked. The most easily missed part is matching a hardware variant to the deployed software package, or artifact. This is mostly because everyday user experience does not expose such a mismatch as a problem. Trying to install an incompatible application that you downloaded onto your laptop shows an error message, and nothing bad happens. Smartphones go one step further and only offer applications or updates that are explicitly compatible with the device in question.

This is already a good example of how artifact matching works in over-the-air (OTA) scenarios, where robustness is crucial and physical user interaction is often unavailable. Embedded devices often adopt concepts from the smartphone world, and, as with smartphones, artifacts for embedded devices should only be deployed if they match the device. This applies both to updates in the field and to the manufacturing process.

Taking into account the A/B partition layout that Mender uses for robust system updates, we need to provide at least two artifacts for each hardware variant in every release: the initial image and the system update image. Both need to be marked as compatible with that specific hardware variant, and this marking needs to stay consistent over the whole maintenance period. A careful setup that integrates Yocto BSPs into a Mender deployment pipeline helps with this.
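To make the matching rule concrete, here is a minimal Python sketch. It is a simplified model under stated assumptions, not Mender's implementation: every artifact is assumed to carry a list of compatible device types, each release produces one initial image and one update per variant, and the names Artifact, release_artifacts, can_deploy and the second variant mmp-raspberrypi4 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    """Hypothetical description of one release artifact."""
    release: str
    kind: str                    # "initial-image" or "update"
    compatible: tuple[str, ...]  # device types this artifact may be installed on


def release_artifacts(release: str, variants: list[str]) -> list[Artifact]:
    """Minimum set per release: an initial image and an update for every variant."""
    return [
        Artifact(release, kind, (variant,))
        for variant in variants
        for kind in ("initial-image", "update")
    ]


def can_deploy(artifact: Artifact, device_type: str) -> bool:
    """Deploy only if the device's type is listed as compatible by the artifact."""
    return device_type in artifact.compatible


if __name__ == "__main__":
    # "mmp-raspberrypi4" is a hypothetical second hardware variant.
    artifacts = release_artifacts("release-1", ["mmp-beaglebone-yocto", "mmp-raspberrypi4"])
    print(len(artifacts))  # 4
    bbb_update = next(
        a for a in artifacts
        if a.kind == "update" and a.compatible == ("mmp-beaglebone-yocto",)
    )
    print(can_deploy(bbb_update, "mmp-beaglebone-yocto"))  # True
    print(can_deploy(bbb_update, "mmp-raspberrypi4"))      # False
```

In meta-mender, the device type used for this kind of check defaults to the MACHINE name (via MENDER_DEVICE_TYPE), which is one reason why giving each hardware variant its own machine name pays off.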

Board support packages (BSP)

When it comes to creating software for a specific hardware variant, the corresponding board support package (BSP) plays a major role. The BSP needs to offer everything that is required to bring a specific hardware variant into operation. At a bare minimum, this can be a well-chosen set of configuration files in cases where the board is already properly supported in the wider software ecosystem. Most BSPs include at least the following:

- configuration and patches for the bootloader and the Linux kernel

- storage layout and artifact creation mechanism

Depending on the board, things often included with the BSP are:

- driver packages for graphics or network

- utilities for accessing specialized hardware peripherals

- tools required for board-specific artifact generation.

A good BSP brings as much as needed, and as little as possible: it should include everything a user needs to work with a board, but nothing more. For developers, BSPs that go beyond what is required and affect a software build outside the hardware-related areas are a major headache. Therefore, a good BSP only has an effect if the hardware it supports is actually targeted, and is completely inert otherwise.

A BSP in the Yocto Project

Let's now look at actual code and examine how a BSP for use with the Yocto Project is shipped. Almost every piece of software in the Yocto ecosystem is shipped as a metadata layer, and BSPs are no different. Even Yocto's reference distribution, poky, already includes a BSP layer, so let's inspect that.

```text
$ tree poky/meta-yocto-bsp/
poky/meta-yocto-bsp/
├── conf
│   ├── layer.conf
│   └── machine
│       ├── beaglebone-yocto.conf
│       ├── genericarm64.conf
│       ├── genericx86-64.conf
│       ├── genericx86.conf
│       └── include
│           └── genericx86-common.inc
├── lib
│   └── oeqa
│       ├── controllers
│       │   ├── beaglebonetarget.py
│       │   ├── grubtarget.py
│       │   └── __init__.py
│       ├── runtime
│       │   └── cases
│       │       ├── parselogs-ignores-beaglebone-yocto.txt
│       │       └── parselogs-ignores-genericx86-64.txt
│       └── selftest
│           └── cases
│               └── systemd_boot.py
├── README.hardware.md
├── recipes-bsp
│   └── formfactor
│       ├── formfactor
│       │   ├── beaglebone-yocto
│       │   │   └── machconfig
│       │   ├── genericx86
│       │   │   └── machconfig
│       │   └── genericx86-64
│       │       └── machconfig
│       └── formfactor_0.0.bbappend
├── recipes-graphics
│   └── xorg-xserver
│       ├── xserver-xf86-config
│       │   ├── beaglebone-yocto
│       │   │   └── xorg.conf
│       │   ├── genericx86
│       │   │   └── xorg.conf
│       │   └── genericx86-64
│       │       └── xorg.conf
│       └── xserver-xf86-config_0.1.bbappend
├── recipes-kernel
│   └── linux
│       ├── linux-yocto_6.6.bbappend
│       └── linux-yocto-dev.bbappend
└── wic
    ├── beaglebone-yocto.wks
    ├── genericarm64.wks.in
    └── genericx86.wks.in

25 directories, 26 files
```

Just by glancing over the directory structure, you can see that this layer ships not just one, but actually four BSPs: beaglebone-yocto, genericarm64, genericx86 and genericx86-64. This illustrates the rule about BSPs being inert: adding the layer to the build setup makes all of the included machines available, but they do not affect each other.

Looking at the structure in more detail, it becomes apparent that the core piece of each BSP is the configuration file in the conf/machine directory. By convention, the filename of the machine configuration file matches the value being used by the build for the MACHINE variable. We will also follow this practice in order to avoid friction.
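Because the machine name follows from the file name, the machines a layer offers can be listed without running BitBake at all. Here is a minimal Python sketch, assuming the standard conf/machine layout shown above; the helper name machines_in_layer is hypothetical.

```python
import sys
from pathlib import Path


def machines_in_layer(layer_dir: str) -> list[str]:
    """Return the MACHINE names a layer provides, derived from conf/machine/*.conf.

    Relies on the convention that the file name (without .conf) equals the
    MACHINE value. Files in subdirectories such as conf/machine/include are
    not matched, because this glob pattern is not recursive.
    """
    machine_dir = Path(layer_dir) / "conf" / "machine"
    return sorted(p.stem for p in machine_dir.glob("*.conf"))


if __name__ == "__main__":
    layer = sys.argv[1] if len(sys.argv) > 1 else "poky/meta-yocto-bsp"
    for name in machines_in_layer(layer):
        print(name)
```

Pointed at poky/meta-yocto-bsp from the tree above, it should list beaglebone-yocto, genericarm64, genericx86 and genericx86-64.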

Given this structure, the machine configuration file is the focal point for understanding board support. Let's look at the beaglebone-yocto one as an example:

```
# <snip>
IMAGE_FSTYPES += "tar.bz2 jffs2 wic wic.bmap"
EXTRA_IMAGECMD:jffs2 = "-lnp "
WKS_FILE ?= "beaglebone-yocto.wks"
MACHINE_ESSENTIAL_EXTRA_RDEPENDS += "kernel-image kernel-devicetree"
do_image_wic[depends] += "mtools-native:do_populate_sysroot dosfstools-native:do_populate_sysroot virtual/bootloader:do_deploy"
# <snip>
PREFERRED_PROVIDER_virtual/kernel ?= "linux-yocto"
PREFERRED_VERSION_linux-yocto ?= "6.6%"
KERNEL_IMAGETYPE = "zImage"
DTB_FILES = "am335x-bone.dtb am335x-boneblack.dtb am335x-bonegreen.dtb"
KERNEL_DEVICETREE = '${@' '.join('ti/omap/%s' % d for d in '${DTB_FILES}'.split())}'
PREFERRED_PROVIDER_virtual/bootloader ?= "u-boot"
# <snip>
```

There are some additional things in the file, but this excerpt shows the core functionality. Variables starting with PREFERRED_PROVIDER select which recipe provides a specific functionality. In this case, both the bootloader and the kernel use the standard variants included with the Yocto Project. As this is an ARM-based board, device trees are selected too. A full explanation of the device tree concept is beyond the scope of this article, so please refer to dedicated resources for further information on this topic. In short, the device tree is the main source of hardware information that the Linux kernel relies on. Therefore, handling hardware variants often means taking care of the device tree to account for the differences between them.
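The KERNEL_DEVICETREE line uses BitBake's inline Python syntax, ${@...}. Below is a plain-Python sketch of what that expression computes, with the DTB_FILES value copied from the excerpt. It assumes, as BitBake does, that ${DTB_FILES} is expanded before the inline code runs; the helper name kernel_devicetree and its subdir parameter are hypothetical. The ti/omap/ prefix reflects the vendor subdirectories in which recent kernels keep their 32-bit ARM device trees.

```python
# Value copied from the beaglebone-yocto.conf excerpt.
DTB_FILES = "am335x-bone.dtb am335x-boneblack.dtb am335x-bonegreen.dtb"


def kernel_devicetree(dtb_files: str, subdir: str = "ti/omap") -> str:
    """Prefix each device tree blob with its vendor subdirectory in the kernel tree."""
    return " ".join(f"{subdir}/{d}" for d in dtb_files.split())


if __name__ == "__main__":
    print(kernel_devicetree(DTB_FILES))
    # ti/omap/am335x-bone.dtb ti/omap/am335x-boneblack.dtb ti/omap/am335x-bonegreen.dtb
```

Because KERNEL_DEVICETREE is assigned with = and therefore expanded lazily, a derived machine could, for example, redefine DTB_FILES below its require statement to ship only the device tree of its own board.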

The last important section shown is the setup of the actual generated image. This includes the filesystem and the partition layout, the latter often indirectly through a wks file. In many cases, hardware variants only differ in the storage available on the device, so adjusting the corresponding settings is also common in machine configurations.

Application platform layer

So far we have covered only things that are supplied to us by third parties, such as the Yocto Project layers, or BSP layers from the hardware vendors. You should avoid modifying them so that you retain the possibility of updating them without manual intervention, e.g. manually reapplying your modifications. Consequently, we put all of our additions and modifications into an additional layer that we maintain ourselves. The following assumes that you already have a metadata layer in which you are organizing the base build for your device. If not, you can create a boilerplate with the bitbake-layers create-layer command. For more information on the full procedure, please refer to the Yocto Project documentation. The rest of this article will use a layer called meta-mender-multiplatform.

Adding to the BSP-provided machine

By creating a machine configuration in your own layer, you can build upon and extend the provided machine. We will do so for the beaglebone-yocto machine to achieve two things:

- We want the machine name to be different. In a real project, you would probably choose a name that refers to the product. For the examples in this article, we will just prefix the original machine name with mmp-, short for mender multiplatform, but this can be any name that meets the Yocto build's requirements for machine names.

- We want to place all machine-specific information here that higher layers of our build stack depend upon. A good example is hardware-specific information about storage that Mender relies upon. As an example, we will request 512MB of persistent storage for the Mender data partition.

The key feature in Yocto that facilitates this approach is called overrides. Those exist for both machine and distro, the former being the one we will leverage here. At this point, we have covered all the groundwork and can finally create our machine configuration file. The full path including the layer directory is given for clarity.

mender-multiplatform/conf/machine/mmp-beaglebone-yocto.conf

```
MACHINEOVERRIDES =. "beaglebone-yocto:"
require conf/machine/beaglebone-yocto.conf
MENDER_DATA_PART_SIZE_MB = "512"
```

So what is happening here? The first line prepends an additional entry to the machine overrides. This instructs the build: "whatever you have that is specific to the machine beaglebone-yocto, we want it too". Great, half of the work is already done! The remaining half comes in the second line. The require statement includes the original machine file, bringing all of its settings into the scope of our machine. So mission number 1 is already accomplished: we have created a full drop-in replacement for the original machine under a name we chose. Mission number 2 remains: adding additional information. From here on this is straightforward; we just add whatever we need below the require statement. This works because BitBake evaluates all metadata from top to bottom, so at this point we can change, add or adjust any machine-wide configuration we desire.
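The effect of the MACHINEOVERRIDES line can be illustrated with a small Python model. It is a simplified sketch, not BitBake's actual override engine: the real OVERRIDES variable contains many more entries (architecture, distro, recipe name and more), the starting value of MACHINEOVERRIDES is an assumption, and example_var is a hypothetical variable.

```python
from typing import Optional


def prepend(current: str, prefix: str) -> str:
    """Model BitBake's '=.' operator: prepend without inserting a space."""
    return prefix + current


def resolve(assignments: dict[str, str], overrides: str) -> Optional[str]:
    """Return a variable's effective value under a simplified override model.

    assignments maps an override name to a value; the key "" holds the plain,
    unconditional assignment (VAR = ...), any other key stands for VAR:<key> = ...
    Overrides further right in the colon-separated list take precedence.
    """
    value = assignments.get("")
    for override in overrides.split(":"):
        if override and override in assignments:
            value = assignments[override]  # later (rightmost) matches win
    return value


if __name__ == "__main__":
    # Assumption: MACHINEOVERRIDES initially holds only the MACHINE name.
    machineoverrides = prepend("mmp-beaglebone-yocto", "beaglebone-yocto:")
    print(machineoverrides)  # beaglebone-yocto:mmp-beaglebone-yocto

    # Hypothetical variable with a generic value and a BSP-specific one.
    example_var = {"": "generic", "beaglebone-yocto": "from-bsp"}
    print(resolve(example_var, machineoverrides))  # from-bsp

    # A value set for the derived machine beats the inherited BSP value.
    example_var["mmp-beaglebone-yocto"] = "from-derived-machine"
    print(resolve(example_var, machineoverrides))  # from-derived-machine
```

The model also shows why the operator is =. (prepend) rather than .= (append): prepending keeps mmp-beaglebone-yocto as the rightmost, and therefore highest-priority, machine override, so anything set specifically for the derived machine wins over what the original BSP provides.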

And that's it! Congratulations, you have just created a customized BSP!

Deriving a custom distro

Up to this point, we have only talked about things that relate to a specific hardware variant. Now what if we need to define something that should affect all variants? We could theoretically place it in an include file that we require in all machine configurations. However, in the vast majority of cases, such global adjustments do not relate to any machine per se, but rather define the kind of software support your application expects. This is often referred to as the API, and as such it should be defined by the distribution, not the machine. In this article, we want Mender to be added as the OTA solution across all variants. As this clearly matches the definition of "defining the software support/API", we put it into a custom distro. Again, we want our custom distro to be very similar to the reference distro provided by the Yocto Project, which goes by the name poky. So what to do? Right, perform another override. The mechanisms are similar to those used for extending the machine configuration.

mender-multiplatform/conf/distro/mmp-poky.conf

```
DISTROOVERRIDES =. "poky:"
require conf/distro/poky.conf
INHERIT += "mender-full"
```

There are hardly any surprises here anymore, right? We declare that we want to be treated like poky is, so we pull in the original, and then we add our modification.
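The top-to-bottom evaluation that makes both derived configuration files work can be modeled with a few lines of Python. This is a simplified sketch, not BitBake's parser: it ignores ${...} expansion, overrides and weak defaults, and both the starting value of DISTROOVERRIDES and the single stand-in line for poky.conf are assumptions.

```python
def apply(store: dict[str, str], var: str, op: str, value: str) -> None:
    """Apply one BitBake-style assignment to store, in file order.

    Simplified model: no ${...} expansion, no overrides, no weak defaults.
    """
    current = store.get(var)
    if op == "=":
        store[var] = value
    elif op == "?=":
        # Only assign if nothing has been set so far.
        if current is None:
            store[var] = value
    elif op == "+=":
        # Append with a separating space.
        store[var] = f"{current or ''} {value}"
    elif op == "=+":
        # Prepend with a separating space.
        store[var] = f"{value} {current or ''}"
    elif op == ".=":
        # Append without a space.
        store[var] = (current or "") + value
    elif op == "=.":
        # Prepend without a space.
        store[var] = value + (current or "")
    else:
        raise ValueError(f"operator not covered by this model: {op}")


if __name__ == "__main__":
    d: dict[str, str] = {}
    # Assumption: before mmp-poky.conf is parsed, DISTROOVERRIDES holds the DISTRO name.
    apply(d, "DISTROOVERRIDES", "=", "mmp-poky")
    # mmp-poky.conf, line 1
    apply(d, "DISTROOVERRIDES", "=.", "poky:")
    # Stand-in for "require conf/distro/poky.conf": assume it appends one class to INHERIT.
    apply(d, "INHERIT", "+=", "poky-sanity")
    # mmp-poky.conf, line 3: evaluated after everything poky.conf set
    apply(d, "INHERIT", "+=", "mender-full")
    print(d["DISTROOVERRIDES"])  # poky:mmp-poky
    print(d["INHERIT"].split())  # ['poky-sanity', 'mender-full']
```

Because the additions come after the require line, they extend whatever the original distro configuration set instead of being overwritten by it.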

This is the last piece we require in terms of actual code. For the sake of robustness, we should, as a last step, define the metadata dependencies of our layer.

Defining layer dependencies

Most layers in the Yocto ecosystem depend on one or more other layers. Layers themselves are configured by a special file called layer.conf, which resides in the conf directory. This file contains two variable entries: one defines the layer's internal "collection name", and the other, based on this name, lists the collections upon which the layer depends. Our layer is called mender-multiplatform, so we also use this as the collection name:

```
BBFILE_COLLECTIONS += "mender-multiplatform"
```

We can look up the same declaration in the layers we need to depend upon, and put their collection names into our dependency list:

```
LAYERDEPENDS_mender-multiplatform = " \
    yocto \
    yoctobsp \
"
```

You might be surprised by these names, as they haven't been mentioned so far. This is for historic reasons: the layers providing the original configurations for the poky distro and the beaglebone-yocto machine were created with those collection names. As a consequence, the collection names of your dependencies can usually only be found by inspecting their layer.conf files. At this point, all of the metadata work is done.
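Finding collection names by inspection can be partly automated. The Python sketch below reads conf/layer.conf from a set of layer directories, collects the BBFILE_COLLECTIONS and LAYERDEPENDS_* entries, and reports dependencies that none of the layers provide. It is a hypothetical helper with deliberately simple parsing: it assumes double-quoted values, joins backslash continuations, and ignores version constraints and other assignment forms. BitBake performs the authoritative check itself when it parses the layers.

```python
import re
import sys
from pathlib import Path

# Simplified patterns: double-quoted values, "=" or "+=" only.
COLLECTION_RE = re.compile(r'^BBFILE_COLLECTIONS\s*\+?=\s*"([^"]*)"', re.MULTILINE)
DEPENDS_RE = re.compile(r'^LAYERDEPENDS_([\w-]+)\s*\+?=\s*"([^"]*)"', re.MULTILINE)


def read_layer_conf(layer_dir: str) -> tuple[list[str], dict[str, list[str]]]:
    """Return (collection names, {collection: [dependencies]}) for one layer."""
    text = (Path(layer_dir) / "conf" / "layer.conf").read_text()
    text = text.replace("\\\n", " ")  # join backslash-continued lines
    collections = [name for m in COLLECTION_RE.finditer(text) for name in m.group(1).split()]
    depends: dict[str, list[str]] = {}
    for m in DEPENDS_RE.finditer(text):
        # Drop optional version constraints such as "core (>= 12)".
        value = re.sub(r"\([^)]*\)", " ", m.group(2))
        depends.setdefault(m.group(1), []).extend(value.split())
    return collections, depends


def missing_dependencies(layer_dirs: list[str]) -> dict[str, list[str]]:
    """Return {collection: [unmet dependencies]} across all given layers."""
    provided: set[str] = set()
    wanted: dict[str, list[str]] = {}
    for layer_dir in layer_dirs:
        collections, depends = read_layer_conf(layer_dir)
        provided.update(collections)
        for name, deps in depends.items():
            wanted.setdefault(name, []).extend(deps)
    missing = {}
    for name, deps in wanted.items():
        unmet = [dep for dep in deps if dep not in provided]
        if unmet:
            missing[name] = unmet
    return missing


if __name__ == "__main__":
    for layer_dir in sys.argv[1:]:
        collections, _ = read_layer_conf(layer_dir)
        print(f"{layer_dir}: {' '.join(collections)}")
    for name, unmet in missing_dependencies(sys.argv[1:]).items():
        print(f"{name} is missing: {' '.join(unmet)}")
```

Pointing it at meta-poky, meta-yocto-bsp and your own layer prints their collection names, which is one way to discover names such as yocto and yoctobsp.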

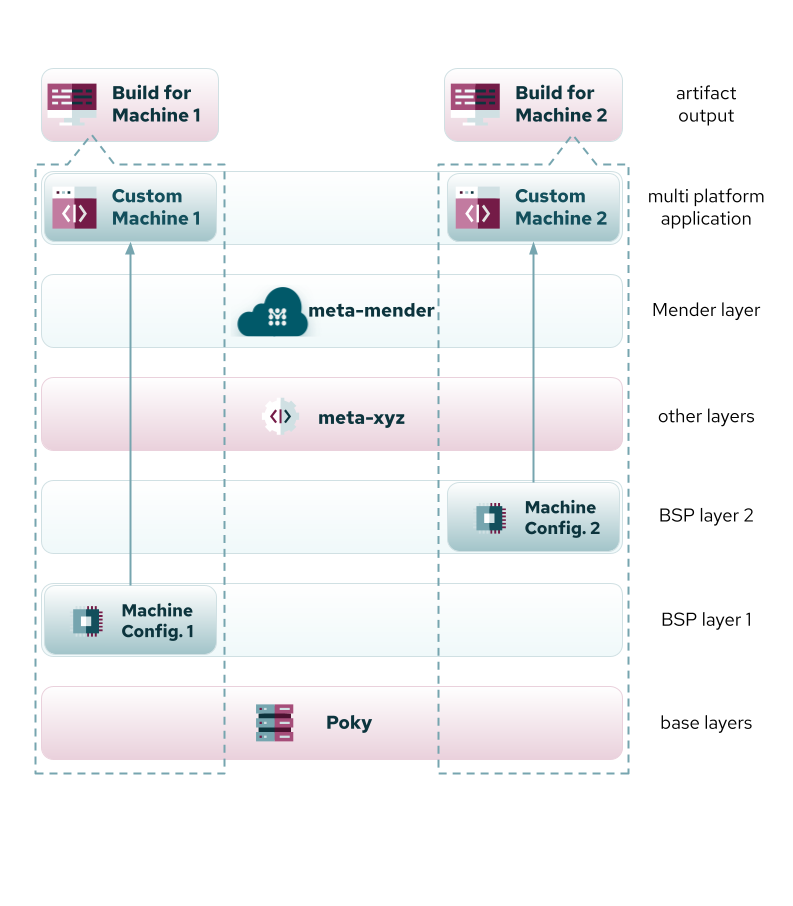

Figure 1: Organization of custom overrides in a single layer to facilitate builds for multiple machines.


Bonus: automated setup with kas

If you have worked with complex setups in the past, then you know that setting up all layers at the desired revision is an error-prone and tedious process. In the following section, we will take a short peek at the kas tool, which is one of the most popular solutions for this problem and has the benefit of using a human-readable configuration format. This only gives a glimpse of the parts relevant for this article. For more information on kas itself, please refer to its documentation.

The kas tool can be installed from the PyPI repository:

```
$ pip install kas
```

A simple configuration that can be supplied right inside the application layer looks like this:

```yaml
header:
  version: 14

distro: mmp-poky

defaults:
  repos:
    branch: scarthgap

repos:
  mender-multiplatform:

  poky:
    url: https://git.yoctoproject.org/git/poky
    layers:
      meta:
      meta-poky:
      meta-yocto-bsp:

  meta-openembedded:
    url: https://git.openembedded.org/meta-openembedded
    layers:
      meta-oe:

  meta-mender:
    url: https://github.com/mendersoftware/meta-mender.git
    layers:
      meta-mender-core:
      meta-mender-demo:

  meta-lts-mixins:
    url: https://git.yoctoproject.org/meta-lts-mixins
    branch: scarthgap/u-boot

  meta-mender-community:
    url: https://github.com/mendersoftware/meta-mender-community.git
    layers:
      meta-mender-beaglebone:
      meta-mender-raspberrypi:

  meta-raspberrypi:
    url: https://github.com/agherzan/meta-raspberrypi.git

local_conf_header:
  base: |
    CONF_VERSION = "2"
    PACKAGE_CLASSES = "package_ipk"
    MENDER_ARTIFACT_NAME = "release-1"

machine: unset

target:
  - core-image-minimal
```

The special twist in the multi-hardware-variant setting is to use kas "only" as the setup tool, but not to run the actual build. This is achieved by the machine: unset setting. It effectively prevents kas from running the build unintentionally, as the build will stop early without a valid machine being selected.

With all the pieces in place, a build for a number of machines looks like this:

1. Set up the defined layers and configuration:
   ```
   $ kas checkout kas.yml
   ```
2. Load the build environment:
   ```
   $ source poky/oe-init-build-env
   ```
3. Build for the machine mmp-beaglebone-yocto:
   ```
   $ MACHINE=mmp-beaglebone-yocto bitbake core-image-minimal
   ```
4. Repeat step 3 for all needed hardware variants.
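Repeating the build for every hardware variant is easy to automate. The following Python sketch loops over a hypothetical list of machine names and runs the same bitbake command once per machine. It assumes the script is started from a shell in which poky/oe-init-build-env has already been sourced, so that bitbake is on the PATH and a MACHINE value from the environment is passed through to the build, just like in the MACHINE=... bitbake command above. By default it only prints the plan; pass --run to actually build.

```python
import os
import subprocess
import sys

# Hypothetical list of hardware variants; each entry needs a matching
# conf/machine/<name>.conf in one of the configured layers.
MACHINES = ["mmp-beaglebone-yocto"]
IMAGE = "core-image-minimal"


def build_plan(machines: list[str], image: str) -> list[tuple[dict[str, str], list[str]]]:
    """Return one (extra environment, command) pair per machine."""
    return [({"MACHINE": machine}, ["bitbake", image]) for machine in machines]


def run(plan: list[tuple[dict[str, str], list[str]]]) -> None:
    """Run the builds one after another in the current build directory."""
    for extra_env, argv in plan:
        env = {**os.environ, **extra_env}
        # check=True raises CalledProcessError and stops at the first failing variant.
        subprocess.run(argv, env=env, check=True)


if __name__ == "__main__":
    plan = build_plan(MACHINES, IMAGE)
    if "--run" in sys.argv:
        run(plan)
    else:
        # Default: only print what would be executed.
        for extra_env, argv in plan:
            assignments = " ".join(f"{k}={v}" for k, v in extra_env.items())
            print(assignments, " ".join(argv))
```

Because all runs share one build directory, later machines can reuse shared-state (sstate) objects that do not depend on the machine, such as native tools.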

By using a setup like this, you combine easy reproducibility of the build, since kas takes care of the setup, with optimal reuse of the resulting shared-state (sstate) cache across all of your hardware variants. The latter is especially useful if you are doing release builds in a CI pipeline and want to avoid sharing a cache across different or subsequent runs in order to verify the integrity of your build pipeline.

Conclusion

In this article, we took a look at BSPs in the context of the Yocto Project. From there, we identified the need to build upon and extend those provided by hardware vendors. By deriving custom machines from the existing ones, we can ensure easy maintenance and optimal matching of hardware variants to the Mender deployment pipeline. As an additional benefit, this can provide artifacts for more than one target device in a single build setup which can substantially shorten the needed time for CI pipeline runs without sacrificing reproducibility.

Next steps

Visit Josef’s source repo on Yocto for multi-platform and try it in conjunction with Mender for OTA updates.
