Introduction

How do Kubernetes and Docker compare when used with the on-premise Mender management server for packaging and deploying software updates over-the-air (OTA) to target embedded Linux devices (Yocto, Debian, Ubuntu)? In this article, we’ll try to answer this question.

However, before diving into the Mender-specific use case, let’s shed some light on the differences between the various approaches.

The backend of the Mender software update manager is a containerized, microservice-based application, so running it requires a container orchestration framework. The documentation suggests Docker Compose or Kubernetes, but how do they compare? What are the differences between Kubernetes and Docker? Is Kubernetes recommended for a production-grade deployment? And what about Docker Swarm vs Kubernetes?

Docker containers

We can start by saying, “In the beginning, there was Docker”. Its first public release dates to 2013, and it has become the de facto standard for packaging and distributing applications. Containerized applications can run in any environment supported by the Docker engine.

Today, the Open Container Initiative (OCI), a Linux Foundation project, defines open standards for Linux containers, and many alternative container runtimes exist besides Docker.

Container orchestration

As the number of containers in an application grows, managing them becomes increasingly challenging. Deployment, management, monitoring, configuration, and communication between containers, not to mention autoscaling, all add considerably to the complexity.

For this reason, the Docker team implemented Docker Compose, a tool to define and share multi-container applications. Using Compose, you can define the services for your application in a single YAML file and spin everything up or tear it all down with a single command.
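As a minimal sketch of what such a definition looks like, the Python snippet below builds a two-service Compose file and the single commands that would start and stop it. The service names (`api`, `db`), the `example/api` image, and the file name are hypothetical and unrelated to the real Mender services. Because YAML is a superset of JSON, the definition is rendered as JSON with the standard library only; Docker Compose can read such a file when it is passed with `-f`.

```python
import json

# Hypothetical two-service application; names and images are illustrative only.
COMPOSE_DEFINITION = {
    "services": {
        "api": {
            "image": "example/api:1.0",
            "ports": ["8080:8080"],
            "depends_on": ["db"],
        },
        "db": {
            "image": "mongo:6.0",
            "volumes": ["db-data:/data/db"],
        },
    },
    # Named volume so the database survives container restarts.
    "volumes": {"db-data": {}},
}


def render_compose_file() -> str:
    """Render the definition as JSON, which is also valid YAML."""
    return json.dumps(COMPOSE_DEFINITION, indent=2)


def compose_command(path: str, action: str) -> list:
    """Build the single command that starts ("up") or removes ("down") everything."""
    args = ["docker", "compose", "-f", path, action]
    if action == "up":
        args.append("-d")  # run the containers in the background
    return args


if __name__ == "__main__":
    print(render_compose_file())
    print(" ".join(compose_command("compose.json", "up")))
    print(" ".join(compose_command("compose.json", "down")))
```

Saving the rendered text as `compose.json` and running the printed `up` command would start both containers on the local Docker engine.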

From a single host to a network of worker nodes

The most significant limitation of Docker Compose is that it runs on one host machine, representing a single point of failure. For this reason, more complex orchestration tools arose to distribute and load balance the workload on multiple worker nodes.

The Docker team implemented Docker Swarm, a clustered orchestration tool, to solve this problem. However, Swarm's functionalities are not extensive, and its adoption is limited. As Kubernetes became the most prominent project in these scenarios, we'll focus on it in this article.

What is Kubernetes?

Kubernetes, a Cloud Native Computing Foundation project, is the most common solution for running containers across multiple computers, either virtual machines in the public cloud or physical hosts in your data center.

The major public cloud providers offer ready-to-go Kubernetes Services, which simplify the user experience by automating a cluster's setup, maintenance, and operation. In addition, they often provide the master node running the control plane as a service and let the customer focus on the configuration of the workload.

Kubernetes vs Docker Compose

With both tools, you declare the services you want to run in manifest files, usually written in YAML. However, Docker Compose directly interacts with and controls the Docker engine on your local machine. In contrast, the Kubernetes tools communicate with a control plane, which orchestrates the application across multiple worker nodes.

A Kubernetes cluster is more complex than a single machine running Docker Compose. In return, it offers exceptionally high flexibility in configuring, managing, and scaling the workload.
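For comparison with a Compose service definition, here is a hedged sketch of the equivalent Kubernetes object: a Deployment manifest built as a Python dictionary and rendered as JSON, which `kubectl apply -f` accepts alongside YAML. The application name, image, and port are hypothetical. Note the `replicas` field: instead of starting containers on the local engine, the manifest states a desired count that the control plane schedules across the worker nodes.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment for a single-container service."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired number of identical pods; the control plane places them.
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical service; not one of the real Mender microservices.
    manifest = deployment_manifest("api", "example/api:1.0", replicas=3, port=8080)
    print(json.dumps(manifest, indent=2))
```

Saved to a file, the output could be submitted with `kubectl apply -f deployment.json`, which sends it to the cluster's API server rather than to a local Docker engine.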

Mender Server, what to choose?

You can run the Mender Server using either Docker Compose or Kubernetes. Which to choose depends on the specific needs and requirements of your project.

Demo installation and evaluation

Docker Compose is probably the most appropriate choice when evaluating Mender for the first time. It is a quick and easy way to run the Mender backend without special requirements: all you need is the Docker engine and Docker Compose. However, the demo setup runs on a single host and doesn’t provide high availability.

You can follow the instructions on the Demo installation page of the Mender documentation site.

Deployment using Docker Compose

There are use cases where high availability and load-balancing are not required. In these cases, Kubernetes could be too complicated to operate, and you might prefer to use Docker Compose because of its simplicity and ease of use.

The requirements to run the Mender server using Docker Compose in a production environment are the same as those described for the demo setup. However, the installation is different: it requires creating Docker volumes to store the data, and provisioning and configuring the SSL certificates to be installed in the API gateway.

While it is technically possible to scale the Mender server microservices using Docker Compose by running multiple instances simultaneously and load balancing the requests among them, the advantages of doing so are limited by the fact that Docker Compose can only run on a single host. Therefore, the scalability of a Docker Compose deployment will always depend on the resources and vertical scaling limit of the virtual or physical machine running the application.
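The single-host ceiling can be made concrete with some back-of-the-envelope arithmetic. The sketch below is only an illustration under simplifying assumptions: every replica needs a fixed amount of CPU and memory, nothing is reserved for the operating system, and all figures (host size, per-replica needs, node count) are made up rather than measured from a Mender installation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_cores: float
    memory_gib: float


def max_replicas(capacity: Resources, per_replica: Resources) -> int:
    """Replicas that fit on one machine, limited by whichever resource runs out first."""
    by_cpu = int(capacity.cpu_cores // per_replica.cpu_cores)
    by_memory = int(capacity.memory_gib // per_replica.memory_gib)
    return min(by_cpu, by_memory)


if __name__ == "__main__":
    host = Resources(cpu_cores=8, memory_gib=32)        # hypothetical machine
    replica = Resources(cpu_cores=0.5, memory_gib=1)    # hypothetical service needs

    single_host = max_replicas(host, replica)
    print(single_host)        # 16: CPU is exhausted before memory

    # With a cluster, adding nodes raises the ceiling; one host cannot do that.
    worker_nodes = 3
    print(worker_nodes * single_host)  # 48
```

On one host, the only way past the limit is a larger machine; in a cluster, it is another node.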

On the other hand, a single host running the application using Docker Compose simplifies the infrastructure provisioning, maintenance, backup, and disaster recovery procedures. For instance, taking a full snapshot of the virtual machine running the Mender Server is enough to ensure a proper system backup, provided you stop the application first so that no data changes in the middle of the snapshot.

To install the Mender Server with this setup, you can follow the instructions on the Installation with Docker Compose page of our documentation site.
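The stop, snapshot, restart sequence described above can be sketched as a small helper. This is an assumption-laden outline, not Mender's documented backup procedure: it uses plain `docker compose stop` and `docker compose start` from the project directory, and `take_snapshot` is a hypothetical hook where you would call your hypervisor or cloud provider. The `run` parameter exists only so the sequence can be dry-run without Docker.

```python
import subprocess
from typing import Callable, List, Sequence

Runner = Callable[[Sequence[str]], None]


def run_checked(command: Sequence[str]) -> None:
    """Run a command and raise if it exits with a non-zero status."""
    subprocess.run(list(command), check=True)


def backup_with_downtime(take_snapshot: Callable[[], None],
                         run: Runner = run_checked) -> None:
    """Stop the containers, take the snapshot, then always start them again."""
    run(["docker", "compose", "stop"])
    try:
        take_snapshot()  # hypothetical hook: trigger the VM snapshot here
    finally:
        # Restart even if the snapshot failed, so the outage stays short.
        run(["docker", "compose", "start"])


if __name__ == "__main__":
    # Dry run: record the steps instead of executing them.
    steps: List[str] = []
    backup_with_downtime(
        take_snapshot=lambda: steps.append("snapshot"),
        run=lambda command: steps.append(" ".join(command)),
    )
    print(steps)
```

The `try`/`finally` is the important design choice: a failed snapshot should not leave the server stopped.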

Production deployment using Kubernetes

If your Mender Server deployment’s requirements include high availability and scalability, we suggest deploying it on Kubernetes.

The Mender server is designed to scale horizontally, running multiple instances of the containers to spread the load among multiple worker nodes and serve a larger fleet of devices. In addition, advanced orchestration features available in Kubernetes, such as the Horizontal Pod Autoscaler and the Cluster Autoscaler, automatically scale the service up and down based on the load and replace unhealthy nodes in case of failures.
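To give a feel for how the Horizontal Pod Autoscaler decides on a replica count, the sketch below implements the core rule from the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to its target, rounded up, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). It is a simplification: the real controller also handles pods that are not ready, multiple metrics, and stabilization windows. The replica bounds and metric values in the example are hypothetical.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler rule for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: skip scaling to avoid flapping.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Average CPU utilization of 90% against a 60% target: scale out.
    print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
    # Load drops to 20%: scale in.
    print(desired_replicas(4, current_metric=20, target_metric=60))   # 2
    # 63% is within the 10% tolerance: no change.
    print(desired_replicas(4, current_metric=63, target_metric=60))   # 4
```

When the resulting pods no longer fit on the existing worker nodes, the Cluster Autoscaler is the component that adds nodes.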

Thanks to the deep integration with Cloud providers such as Azure, AWS and GCP, Kubernetes can automatically provision Cloud resources required by the application. For instance, you can easily expose the Mender server to the internet through an Application or Network Load Balancer across multiple availability zones by simply deploying your application to Kubernetes, and letting it provision the cloud resources.

Finally, using Kubernetes, your deployment will be cloud and platform-agnostic. It will solely depend on the availability of a Kubernetes cluster, either managed by the Cloud provider (for example, AWS, Azure, GCP) or on-premise.

To simplify the installation, configuration, and upgrade of the Mender Server on Kubernetes, we provide a Helm chart, a ready-to-use package you can deploy to a cluster. You can follow the instructions on the Production installation with Kubernetes page of our documentation site.

Conclusion

In the end, there is no vanilla, one-size-fits-all solution for installing a production-grade Mender server. As we described, the best strategy depends on your specific project requirements, the size of the deployment and device fleet, your team's skill set, and the available resources for operating and maintaining the system.

If you have any questions or doubts, ample community support is available, and you are welcome to contact us directly for help.

Next step

Try running the Mender server in your infrastructure with Kubernetes or Docker with guidance in the following 3 resources:

Get in contact with us if you need more help.

Recent articles

New Mender experimental AI-enabled feature

Mender in 2025: A year in review with compliance, security, and AI-driven growth

What’s new in Mender: Enhanced delta updates and user experience

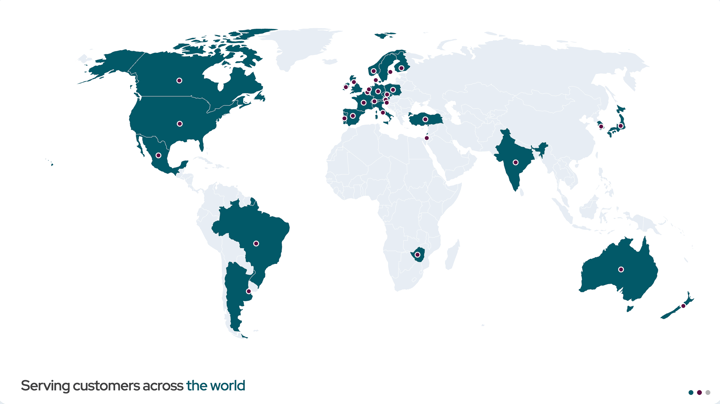

Learn why leading companies choose Mender

Discover how Mender empowers both you and your customers with secure and reliable over-the-air updates for IoT devices. Focus on your product, and benefit from specialized OTA expertise and best practices.