Why is a robust over-the-air (OTA) update process critical in today’s digital age?


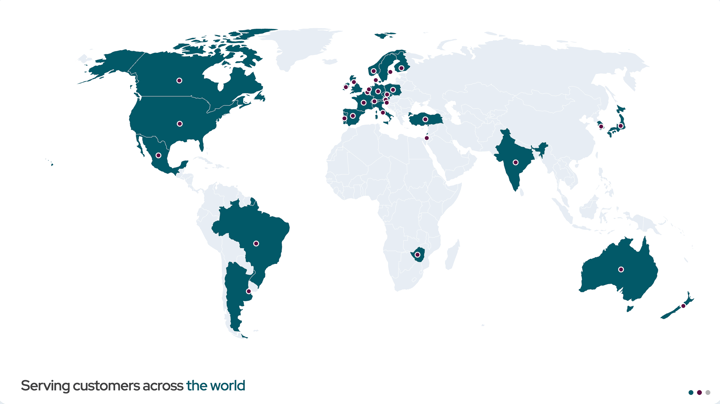

The Crowdstrike-Microsoft incidents highlight the intricacies and interdependencies of today’s digital world. They caused downtime and disruptions for organizations across industries, from aviation and banking to healthcare and emergency services, prompting many to rethink their digital resilience strategies.

“The incident has highlighted the need for improved risk management practices. Enhanced due diligence, rigorous testing of updates, and phased rollouts are now critical.”

– from “CrowdStrike Incident Spurs CIOs To Reassess Cybersecurity”

This path of digital evolution is familiar: an incident occurs, analysis follows, and new best practices emerge. What is perhaps unique about the Crowdstrike-Microsoft situation is that it bridges best practices that were already known and understood, albeit in different areas of technology application and software release management. In traditional IT security, the order of priorities is confidentiality, integrity, and availability (CIA). In traditional OT (operational technology) security, the order is control, availability, integrity, and confidentiality (CAIC). Notably, the more mission-critical the system, the higher availability and control rank.

As such, Crowdstrike and Microsoft highlight the need to reassess previous priorities and assumptions. Organizations need to examine their digital strategy and assess their resilience.

Where to start looking at your digital resilience?

Today’s connected devices need an update process that is more than “like the desktop you know.” Devices, systems, and processes are increasingly integrated, automated, smart, and connected. With those advances comes complexity, and complexity demands more granular and controlled management. Two critical challenges are unique to managing connected devices:

- Scale: Whereas desktops or desktop environments are usually finite in number, a connected device fleet is practically unbounded. Depending on the use and application, a fleet can grow to thousands of devices or more.

- User interaction: A user can almost always interact with a desktop and fix issues hands-on, for example with the mouse and keyboard. This is rarely possible with a connected device, which is often embedded within a larger system where direct user access doesn’t exist.

The update process is fundamental to any system, and it was at the root of the Crowdstrike and Microsoft incidents. Technologically advanced software, hardware, and embedded systems require updates throughout their lifecycle. The update process is the spine of a connected device: it is what keeps all other components working together over time. Without a robust update process, a single faulty update can break parts of the system or the whole device.

Therefore, the first element every digital organization needs to examine is its current update process and the level of robustness, control, and granularity it provides.

What are the key features of a robust update process?

Quality control (QC)/quality assurance (QA) and the update process are intertwined by nature; each relies on the other. A critical component of a robust update process is the set of failsafe mechanisms that safeguard against, mitigate, or minimize the impact of errors that slipped through earlier in the pipeline. However, as in the Crowdstrike update process, if these mechanisms are not used or are bypassed, for example through automatic, fleet-wide deployment, there is little recourse if an error does get past QC/QA.

#1: Establish a failsafe update process – A/B updates

A/B updates are an integral building block for a robust update mechanism. The core idea is to have two versions of the main operating system: the currently active one and an inactive one. These two versions are usually implemented as storage partitions within the system or device.

As the name suggests, the active version, A, is the one currently running. It is in a known state, operating as needed and expected.

The inactive version, B, serves two purposes.

- First, an incoming system update is written to the currently inactive version. Because only the inactive version is written, the system keeps operating normally until the write has finished successfully; if anything goes wrong during the update, such as a loss of power, the running system is unaffected. Only when version B is fully updated is the switchover executed, usually through a reboot during which an update-aware bootloader swaps the active and inactive systems and boots into the updated, previously inactive version B partition.

- The second purpose of the inactive partition comes into effect when something goes wrong, such as a faulty system being deployed. If the bootloader detects that booting the newly switched system has failed, it can revert the switch and roll back to the previously active, known ‘good’ system.
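
To make the A/B flow concrete, here is a minimal Python sketch. It is a toy in-memory model, not real partition or bootloader code: the `Device` class, its slot names, and the `boots_ok` callback (standing in for whatever boot-health check a real bootloader performs) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Device:
    """Toy A/B device: two OS slots plus a record of which one is active.

    Hypothetical model; real systems use storage partitions and an
    update-aware bootloader rather than a Python dict.
    """
    slots: Dict[str, str] = field(default_factory=lambda: {"A": "v1", "B": "v1"})
    active: str = "A"
    pending: bool = False  # True only once a complete image sits in the inactive slot

    @property
    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    def install(self, image: str, power_lost: bool = False) -> bool:
        """Write a new image to the inactive slot only.

        The active slot is never touched, so an interrupted write
        (simulated by power_lost) leaves the running system as it was.
        """
        if power_lost:
            self.slots[self.inactive] = "<partial>"  # half-written, never booted
            self.pending = False
            return False
        self.slots[self.inactive] = image
        self.pending = True
        return True

    def reboot_into_update(self, boots_ok: Callable[[str], bool]) -> str:
        """Swap slots only after a complete write; roll back if the boot fails."""
        if not self.pending:
            return self.slots[self.active]  # nothing complete to switch to
        self.pending = False
        previous = self.active
        self.active = self.inactive          # bootloader swaps active/inactive
        if not boots_ok(self.slots[self.active]):
            self.active = previous           # revert to the known-good slot
        return self.slots[self.active]       # image that is now running


dev = Device()
dev.install("v2", power_lost=True)           # interrupted write: v1 keeps running
dev.install("v2-broken")                     # complete write of a faulty image
print(dev.reboot_into_update(lambda img: "broken" not in img))  # v1 (rolled back)
dev.install("v2")
print(dev.reboot_into_update(lambda img: "broken" not in img))  # v2
```

The `pending` flag mirrors the rule that the switchover happens only once version B is fully written; a partial image is never booted.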

Thus, an A/B update process keeps the device or system functional before, during, and after the update, and prevents a failed update from ‘bricking’ the device or leaving it malfunctioning.

#2: Ensure detection and rollback independence

An often overlooked but important detail is that detection and eventual rollback, as performed by the update-aware bootloader above, must be independent of the actual operating system. They must work whether or not the operating system does.

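If the detection and rollback functionality can only handle problems once the main operating system has started, it can only safeguard application-level updates. A faulty system update may prevent the operating system from starting at all, in which case dependent detection and rollback never get the chance to run. Such dependent functions are therefore unsuitable for system updates.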

If the detection and rollback functionality is independent, it can address system update issues and operate as needed in an A/B update scenario.
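
The difference comes down to where the rollback decision is made. The sketch below, again a hypothetical Python model rather than real bootloader code, keeps a boot counter in persistent bootloader state outside both OS partitions, similar in spirit to the boot-counter mechanisms some bootloaders offer. The updated OS only has to confirm ("commit") itself after starting successfully; if it never gets that far, the bootloader rolls back on its own after a few attempts. `BootEnv`, its fields, and `BOOT_LIMIT` are illustrative names, not any specific bootloader's variables.

```python
from dataclasses import dataclass

BOOT_LIMIT = 3  # hypothetical: boot attempts allowed before rolling back


@dataclass
class BootEnv:
    """Persistent bootloader state, stored outside both OS partitions."""
    active: str = "A"
    upgrade_pending: bool = False
    boot_count: int = 0


def other(slot: str) -> str:
    return "B" if slot == "A" else "A"


def stage_update(env: BootEnv) -> None:
    """Run by the updater once the inactive slot has been written completely."""
    env.active = other(env.active)
    env.upgrade_pending = True
    env.boot_count = 0


def bootloader_select(env: BootEnv) -> str:
    """Runs on every power-on or reset, before any OS code executes.

    Because this logic does not depend on the OS, it still runs after a
    reset caused by a crash or hang in the newly installed system.
    """
    if env.upgrade_pending:
        env.boot_count += 1
        if env.boot_count > BOOT_LIMIT:
            # The new slot never confirmed itself: revert to the old one.
            env.active = other(env.active)
            env.upgrade_pending = False
            env.boot_count = 0
    return env.active


def os_commit(env: BootEnv) -> None:
    """Called by the updated OS only after its own health checks pass."""
    env.upgrade_pending = False
    env.boot_count = 0


env = BootEnv()
stage_update(env)                    # update written to B; switch requested
for _ in range(BOOT_LIMIT):
    print(bootloader_select(env))    # B each time: every attempt fails, no commit
print(bootloader_select(env))        # A: rolled back without the OS's help
```

A rollback that lived inside the OS (say, a service that checks health after startup) would be the dependent variant: it could never run if the new kernel fails to boot.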

#3: Embrace the power of phased rollouts

While the two items above are technical measures that apply at the device level, phased rollouts are a management or process element. Phased rollouts, also called staged or staggered rollouts or sometimes canary deployments, operate at the fleet level. They can also be run manually, under human control, where the update infrastructure does not support phased rollouts (that is, deploying to only a select group) out of the box.

The concept is straightforward. Instead of sending an update to the whole fleet, potentially thousands of devices, all at once, the update is first deployed to only a small fraction, such as 5% of the devices. Only if no substantial problems are detected during a grace period is the update deployed to the rest of the fleet.
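
A phased rollout can be sketched in a few lines as well. This hypothetical Python example selects roughly 5% of a fleet by hashing each device ID together with an update ID, so the selection is stable across runs but differs between updates, then applies a simple gate once the grace period is over. The 24-hour grace period, the 1% failure threshold, and all function names are assumptions for illustration; a real deployment would tune these and watch far richer health signals.

```python
import hashlib

CANARY_FRACTION = 0.05   # first stage: about 5% of the fleet
GRACE_PERIOD_H = 24      # hypothetical grace period, in hours
MAX_FAILURE_RATE = 0.01  # hypothetical threshold for "substantial problems"


def rollout_bucket(device_id: str, update_id: str) -> float:
    """Stable pseudo-random value in [0, 1) for each (update, device) pair."""
    digest = hashlib.sha256(f"{update_id}:{device_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def canary_group(fleet: list[str], update_id: str) -> list[str]:
    """Devices that receive the update in the first stage."""
    return [d for d in fleet if rollout_bucket(d, update_id) < CANARY_FRACTION]


def next_step(failed: int, reported: int, hours_since_start: float) -> str:
    """Decide whether to halt, keep waiting, or deploy to the rest of the fleet."""
    if reported and failed / reported > MAX_FAILURE_RATE:
        return "halt"    # problems detected in the canary group: stop here
    if hours_since_start < GRACE_PERIOD_H or reported == 0:
        return "wait"    # grace period not over, or no reports yet
    return "expand"      # no substantial problems: deploy to the remainder


fleet = [f"device-{i:05d}" for i in range(10_000)]
canaries = canary_group(fleet, "release-2.1")
print(len(canaries))           # about 5% of the 10,000 devices
print(next_step(0, 500, 30))   # expand
```

Hashing rather than random sampling means a re-run of the selection never silently adds or drops canary devices for the same update.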

None of these three elements is novel, and their importance can vary significantly with the organization, device, usage or application, and fleet size. But in light of the Crowdstrike and Microsoft incidents, it may be time to rethink security and resilience priorities.

Embracing a resilience-first strategy

As technology becomes embedded in every facet of society, it raises the question of whether there should still be two distinct disciplines: IT and OT, non-critical and critical infrastructure. Or has society become so dependent on technology that at least some of it is effectively critical infrastructure, even if it is not traditionally classified as such?

Regardless, Crowdstrike and Microsoft exemplify the need for organizations to evolve their digital strategies once again, beyond cybersecurity and privacy basics, to embrace digital resilience. Whether IT or OT, hardware or software, the process for updating the systems society relies on is the backbone of a digital strategy. It must be robust, granular, and failsafe.

“...when it comes to mission-critical systems where failure is unacceptable, you need to exercise extreme caution with any upgrade...there are many ways to do a staged rollout. They include rolling updates, blue/green, canary, and A/B testing. Pick one. Make it work for your enterprise, just don’t put all your upgrades into one massive basket. Besides, robust rollback procedures are essential to revert to a stable version if problems arise quickly. Wouldn’t you have liked just to hit a button and roll back to working systems? Tens of thousands of IT staffers must wish for that now.”

